Audio-Tactile Virtual Objects

Interaction in AHNE takes place in a 3D environment, and the content is presented through audio and haptic feedback. AHNE uses a depth camera (a Microsoft Kinect sensor bar) to track the movement of the user in that space. In its current implementation, the system can therefore be used in any space where the Kinect camera is not exposed to strong infrared light. AHNE also makes use of a hand-tracking sensor and a vibrotactile-augmented glove with a finger-flexing feature.

Hand Tracking. Hand tracking was implemented with a Microsoft Kinect sensor, which tracks the 3D coordinates of the user’s hands. These coordinates were then sent as OSC (Open Sound Control) messages and processed in the Pure Data (Pd) environment.
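As an illustration of what such a message looks like on the wire, the following minimal sketch encodes one hand position as an OSC 1.0 message using only the Python standard library. It is not the authors' implementation: the address `/hand/right`, the function names, and the choice of three float arguments are assumptions made for the example.

```python
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def encode_hand_position(address: str, x: float, y: float, z: float) -> bytes:
    """Build an OSC message carrying three big-endian float32 arguments.

    The address pattern is hypothetical; the source does not state which
    addresses AHNE uses.
    """
    type_tags = osc_string(",fff")          # three float32 arguments
    arguments = struct.pack(">fff", x, y, z)  # OSC numbers are big-endian
    return osc_string(address) + type_tags + arguments


# Example: one tracked position, in arbitrary units (assumed for the example).
packet = encode_hand_position("/hand/right", 0.25, 1.5, 2.0)
```

The resulting bytes could be sent over UDP with `socket.sendto` to whichever port the receiving patch listens on.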

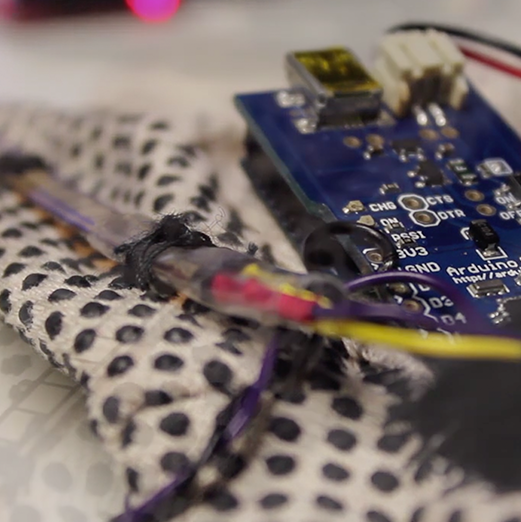

The Gloves. Gloves were chosen as the interface device to provide haptic feedback to the hands and to collect sensor data to return to the system. All of the sensors and actuators were embedded into the gloves and controlled by an Arduino Fio microcontroller (Figure 1). Among the components in the glove, a flex sensor detects grabbing, an accelerometer outputs the angle of the hand, a vibration motor creates the haptic feedback, and an XBee Series 1 module sends all the data wirelessly to Pure Data.
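The way raw glove readings might be turned into a grab state and a hand angle can be sketched as follows. This is a hedged illustration only: the threshold values, the use of hysteresis, and the pitch formula are assumptions, since the source does not describe how the sensor data is processed.

```python
import math

# Hypothetical ADC thresholds (0-1023 range assumed); real values would
# depend on the flex sensor and its calibration.
GRAB_ON = 600
GRAB_OFF = 500


def update_grab(flex_raw: int, was_grabbing: bool) -> bool:
    """Return the new grab state, using two thresholds to avoid flicker."""
    if was_grabbing:
        # Stay in the grab state until the reading drops to the lower threshold.
        return flex_raw > GRAB_OFF
    return flex_raw >= GRAB_ON


def hand_pitch_degrees(ax: float, ay: float, az: float) -> float:
    """Estimate pitch from a static accelerometer reading (gravity only)."""
    return math.degrees(math.atan2(ax, math.sqrt(ay * ay + az * az)))
```

Using separate on and off thresholds is one common way to keep a noisy sensor from toggling the grab state rapidly near a single cutoff.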

Objects. Figure 1(c) shows the layer structure of the Objects, which consists of an outer sphere and an inner cube. The Objects can be manipulated, that is, grabbed and moved to a different location. Objects can also be given a fixed position and size in the space; such an Object can serve as a global controller mapped to features that affect the whole system, such as a master volume controller for the related content.
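The two-layer structure can be sketched as a small data type that reports which layer a tracked hand position falls in. The class and field names are hypothetical, and the rule that an Object moves only while the hand is grabbing inside the inner cube is an assumption for illustration; the source does not specify what each layer triggers.

```python
import math
from dataclasses import dataclass


@dataclass
class VirtualObject:
    center: tuple            # (x, y, z) position in tracking space
    sphere_radius: float     # radius of the outer sphere
    cube_half_side: float    # half the side length of the inner cube
    fixed: bool = False      # fixed Objects (e.g. global controllers) do not move

    def layer_at(self, hand: tuple) -> str:
        """Classify a hand position as 'cube', 'sphere', or 'outside'."""
        dx, dy, dz = (h - c for h, c in zip(hand, self.center))
        if max(abs(dx), abs(dy), abs(dz)) <= self.cube_half_side:
            return "cube"
        if math.sqrt(dx * dx + dy * dy + dz * dz) <= self.sphere_radius:
            return "sphere"
        return "outside"

    def move_to(self, hand: tuple, grabbing: bool) -> None:
        """Follow the hand while it grabs inside the cube (assumed rule)."""
        if grabbing and not self.fixed and self.layer_at(hand) == "cube":
            self.center = tuple(hand)


obj = VirtualObject(center=(0.0, 0.0, 0.0), sphere_radius=1.0, cube_half_side=0.3)
volume = VirtualObject(center=(2.0, 0.0, 0.0), sphere_radius=0.5,
                       cube_half_side=0.2, fixed=True)
```

Here `volume` stands in for a fixed global controller such as the master volume mentioned above; calling `move_to` on it leaves its position unchanged.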